Intelligence at the Edge

A protocol for distributed machine learning that brings AI capabilities to existing infrastructure without centralized data processing

The Gap We're Filling

Centralized AI systems fail at the edge due to latency, privacy risks, and connectivity constraints.

Legacy Infrastructure

Trillions invested in industrial equipment not designed for AI. Replacement is costly and disruptive.

Data Sovereignty

Centralized systems risk non-compliance with GDPR, CCPA, and industry regulations.

Real-Time Demands

Critical applications require millisecond decisions that a round trip to the cloud cannot reliably deliver.

Distributed Intelligence

Collaborative AI across heterogeneous devices without centralizing sensitive data.

How It Works

Federated Learning

Models train locally. Only encrypted updates are shared, preserving privacy.
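As a rough illustration of the round described above, the sketch below runs federated averaging (FedAvg) on a toy one-feature linear model in plain Python. The function names (`local_update`, `federated_average`, `run_rounds`), the learning rate, and the toy data are assumptions made for this example, not part of the protocol. Encryption of the updates is omitted for brevity.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y) pair that stays on one device


def local_update(weights: Sequence[float], data: Sequence[Sample],
                 lr: float = 0.1) -> List[float]:
    """One gradient-descent step on y = w*x + b using only local data."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return [w - lr * grad_w, b - lr * grad_b]


def federated_average(updates: Sequence[Sequence[float]],
                      sizes: Sequence[int]) -> List[float]:
    """Average model updates, weighting each device by its sample count."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[i] * s for u, s in zip(updates, sizes)) / total
            for i in range(dim)]


def run_rounds(device_data: Sequence[Sequence[Sample]],
               rounds: int = 1000, lr: float = 0.1) -> List[float]:
    """Repeat: each device trains locally, then only the weights are averaged."""
    weights = [0.0, 0.0]
    sizes = [len(d) for d in device_data]
    for _ in range(rounds):
        updates = [local_update(weights, d, lr) for d in device_data]
        weights = federated_average(updates, sizes)
    return weights
```

With two devices holding samples of y = 2x, for example `run_rounds([[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]])`, the shared weights should converge toward w ≈ 2 and b ≈ 0 while the raw samples stay on their devices.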

Hardware Attestation

Cryptographic proofs verify computation integrity on untampered hardware.
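The challenge-response idea behind attestation can be sketched as follows. This is a simplified stand-in, not the protocol's actual mechanism: real attestation typically relies on an asymmetric key held in a TPM or TEE, whereas this example assumes a shared secret and an HMAC so that it runs with the Python standard library alone. The names `measure`, `make_quote`, and `verify_quote` are hypothetical.

```python
import hashlib
import hmac
from typing import Tuple

Quote = Tuple[bytes, bytes]  # (measurement, authentication tag)


def measure(firmware: bytes) -> bytes:
    """Hash the firmware image; stands in for a hardware measurement."""
    return hashlib.sha256(firmware).digest()


def make_quote(device_key: bytes, firmware: bytes, nonce: bytes) -> Quote:
    """Device side: bind the measurement to the verifier's fresh nonce."""
    measurement = measure(firmware)
    tag = hmac.new(device_key, measurement + nonce, hashlib.sha256).digest()
    return measurement, tag


def verify_quote(device_key: bytes, expected_measurement: bytes,
                 nonce: bytes, quote: Quote) -> bool:
    """Verifier side: check the tag, then check the measurement is the known-good one."""
    measurement, tag = quote
    expected_tag = hmac.new(device_key, measurement + nonce,
                            hashlib.sha256).digest()
    tag_ok = hmac.compare_digest(tag, expected_tag)
    measurement_ok = hmac.compare_digest(measurement, expected_measurement)
    return tag_ok and measurement_ok
```

The nonce is what prevents replay: a quote recorded for one challenge fails verification against a different one, and a device running modified firmware produces a measurement that no longer matches the expected value.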

Edge-First Design

Processing at the data source removes the network round trip, cutting latency for real-time inference.

Design Principles

Privacy-First

Data never leaves the source. Only encrypted model updates are shared.

Protocol-Agnostic

Compatible with Modbus, OPC-UA, MQTT, and LoRaWAN without modification.

Decentralized

No single point of control. Proof-of-authority consensus among approved validators secures the network.
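One common form of proof-of-authority rotates block production among a fixed list of approved validators. Whether this protocol uses that exact scheme is not stated here, so the sketch below is only an assumption-laden illustration of the round-robin variant. The validator names and the functions `expected_proposer` and `is_valid_block` are hypothetical, and signature checking is left out.

```python
from typing import Sequence


def expected_proposer(validators: Sequence[str], step: int) -> str:
    """Round-robin schedule: the validator whose turn it is at this step."""
    return validators[step % len(validators)]


def is_valid_block(validators: Sequence[str], step: int, signer: str) -> bool:
    """Accept a block only if it was signed by the scheduled authority."""
    return signer == expected_proposer(validators, step)


# Hypothetical validator set, e.g. one node per participating site.
VALIDATORS = ["plant-a", "plant-b", "plant-c"]
```

Under this schedule, `is_valid_block(VALIDATORS, 4, "plant-b")` holds because step 4 maps to index 1, while a block signed by any other node for that step is rejected.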

Industries & Applications

Enabling AI at the edge across critical industries.

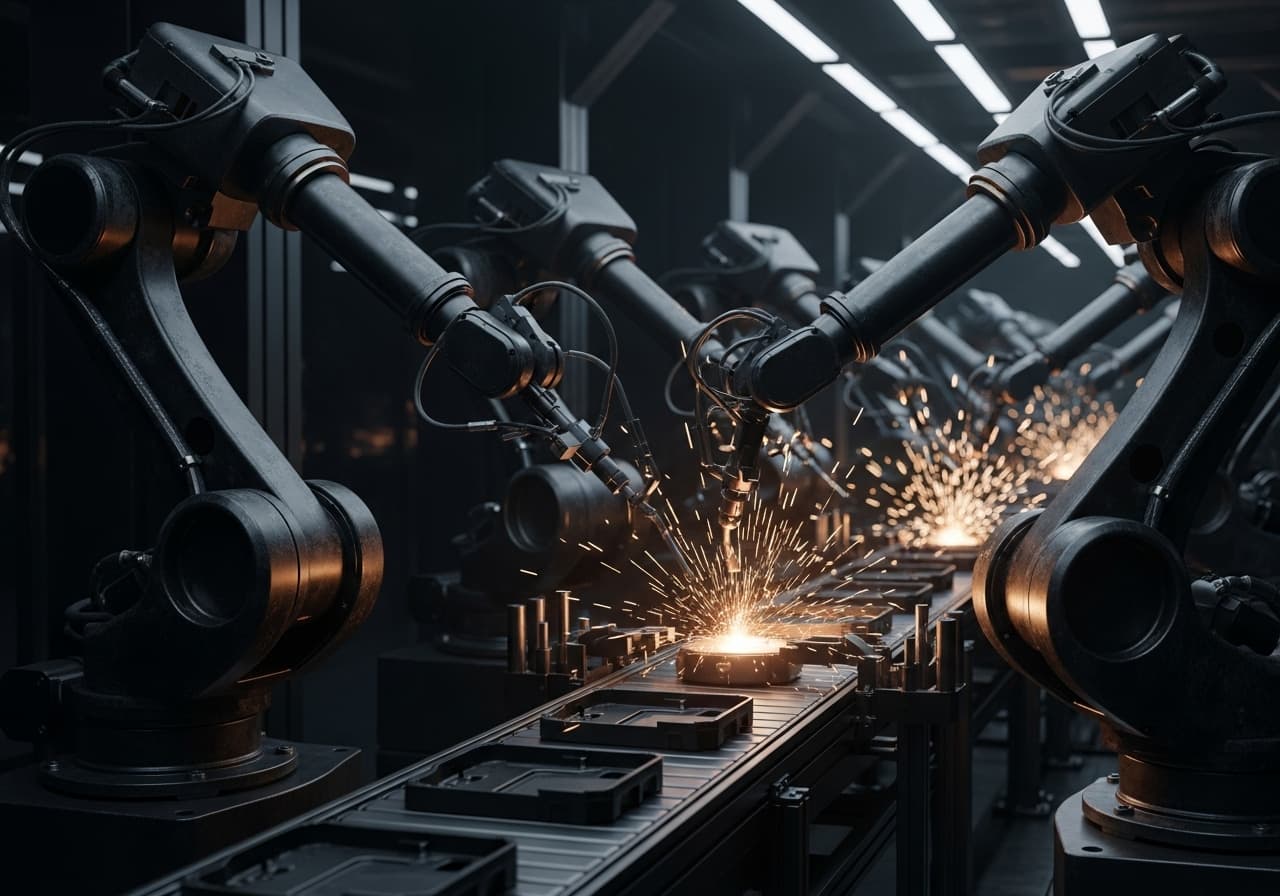

Manufacturing

Predictive maintenance and quality control with federated learning across factory equipment.

Agriculture

Distributed learning across farms for yield optimization without sharing sensitive crop data.

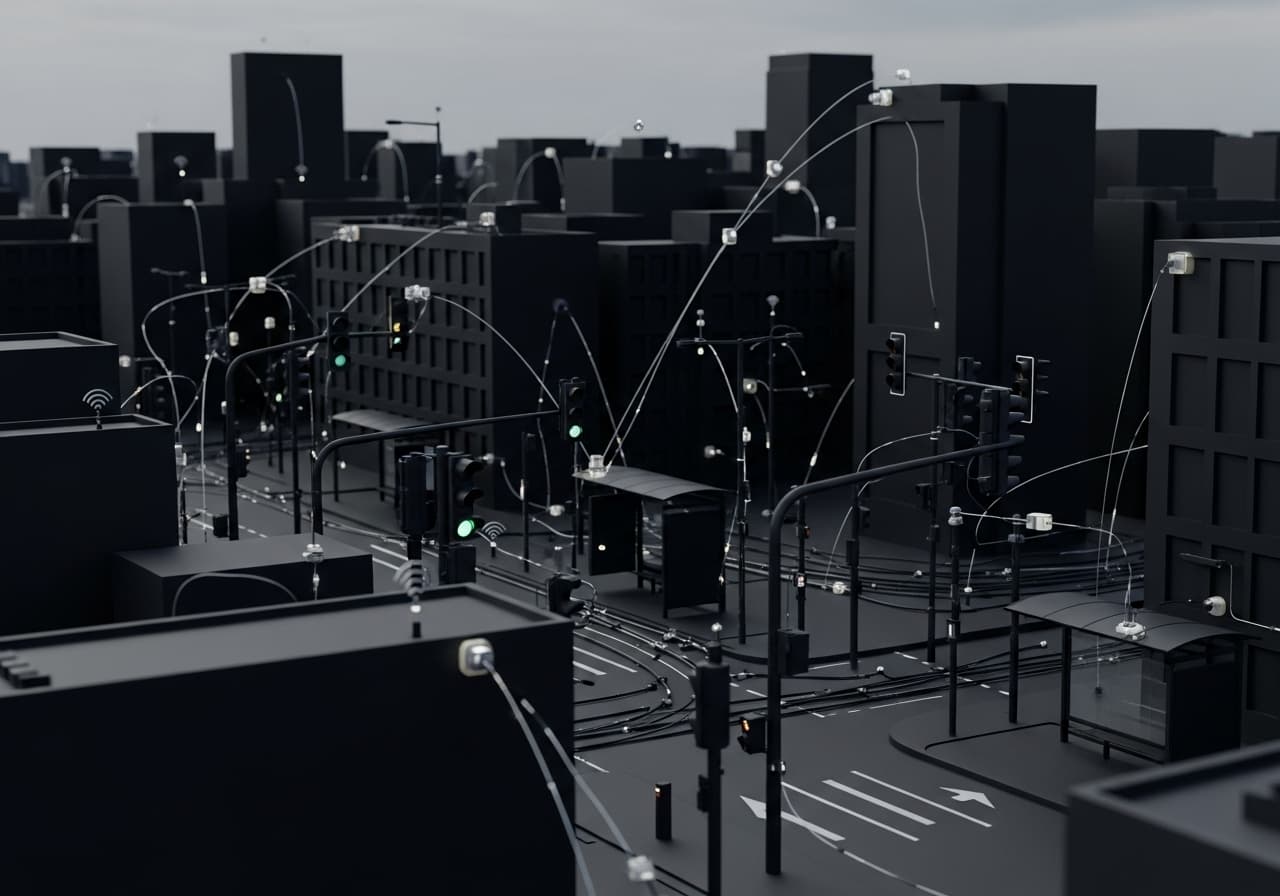

Smart Cities

GDPR-compliant camera and sensor data processing for traffic and emergency response.

Environmental

Real-time monitoring of air quality, water systems, and climate with edge analytics.

Robotics & Automation

Enable autonomous systems to learn collaboratively while maintaining real-time responsiveness and data privacy.

Fleet Learning

Warehouse robots and delivery systems share insights without exposing facility layouts.

Collaborative Robots

Industrial cobots optimize tasks while preserving manufacturing trade secrets.

Autonomous Vehicles

Fleet-wide learning with cryptographic verification for safety-critical decisions.

Modern Edge Hardware

Optimized for the latest generation of edge AI accelerators with GPUs, NPUs, and specialized AI chips.

GPU Acceleration

NVIDIA Jetson platform from Nano to AGX Orin delivering up to 275 TOPS for vision and robotics.

NPU Efficiency

Intel, AMD, and Qualcomm NPUs with up to 99 TOPS combined for on-device AI inference.

Edge TPU

Google Coral ultra-efficient accelerators delivering 4 TOPS at 2W for vision applications.

Framework Support

AI Frameworks

TensorFlow, PyTorch, ONNX Runtime for cross-platform deployment at the edge

Development Tools

NVIDIA Isaac, Edge Impulse, ROS 2 for robotics and embedded systems

Supported Platforms

Compatible with industry-leading hardware and cloud infrastructure

Jetson • CUDA

Coral • Cloud

Movidius • Core

Ryzen AI

Snapdragon

Cortex • Ethos

Edge Accelerators

GPUs, NPUs, and TPUs for high-performance AI inference

Cloud Integration

Hybrid edge-cloud workflows with major providers

Industrial Systems

Compatible with embedded systems and IoT devices

Enterprise Ready

Built with security, compliance, and compatibility at the core.

- TEE Execution

- Hardware Attestation

- Differential Privacy

- End-to-End Encryption

- Modbus

- OPC-UA

- MQTT

- LoRaWAN

- GDPR

- CCPA

- HIPAA

- ISO 9001

- Federated Learning

- Ensemble Models

- Proof of Authority

- Edge-First
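To make the Differential Privacy item in the list above more concrete, here is a minimal sketch of one widely used approach: clip each model update to a maximum L2 norm, then add Gaussian noise scaled to that bound before sharing it. The page does not say which mechanism is used, so the function names and the `max_norm` and `noise_multiplier` parameters are assumptions for illustration, and no privacy budget accounting is shown.

```python
import math
import random
from typing import List, Sequence


def clip_update(update: Sequence[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm == 0.0 or norm <= max_norm:
        return list(update)
    scale = max_norm / norm
    return [v * scale for v in update]


def privatize_update(update: Sequence[float], max_norm: float,
                     noise_multiplier: float,
                     rng: random.Random) -> List[float]:
    """Clip, then add Gaussian noise with std = noise_multiplier * max_norm."""
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]
```

A device would call `privatize_update(update, max_norm=1.0, noise_multiplier=1.1, rng=random.Random())` on its local update before sending it. A larger `noise_multiplier` gives stronger privacy at the cost of a noisier aggregate.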

Join the Edge Revolution

We're building the future of distributed intelligence. Connect with us to learn more about deploying AI at the edge.